Google Coral USB Accelerator performance with Raspberry Pi 3B, 3A+, 4B

The Google Coral USB Accelerator is a device that attaches to a computer to speed up inference in machine learning projects. It acts as a coprocessor and provides hardware acceleration for neural networks. It can make inference about 10 times faster.

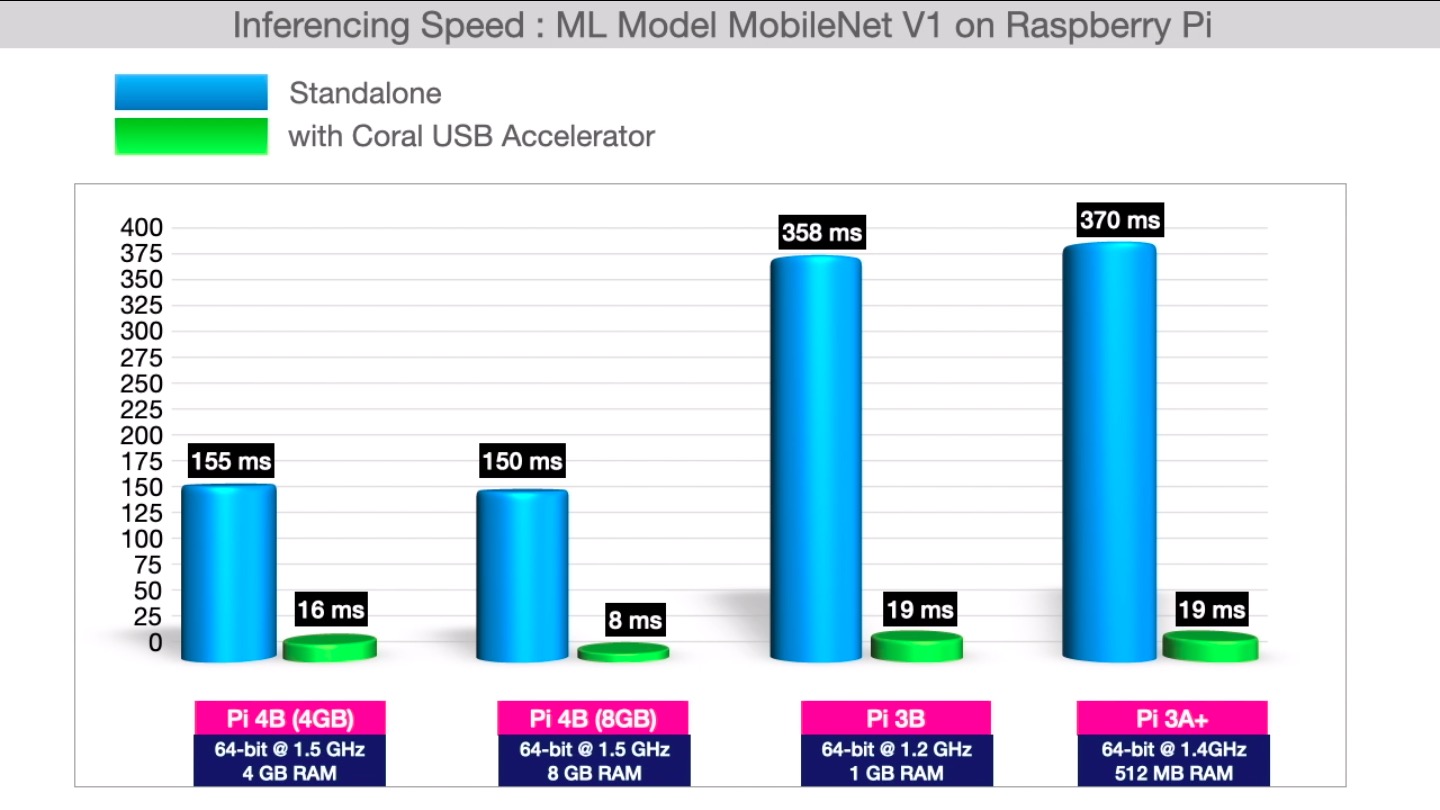

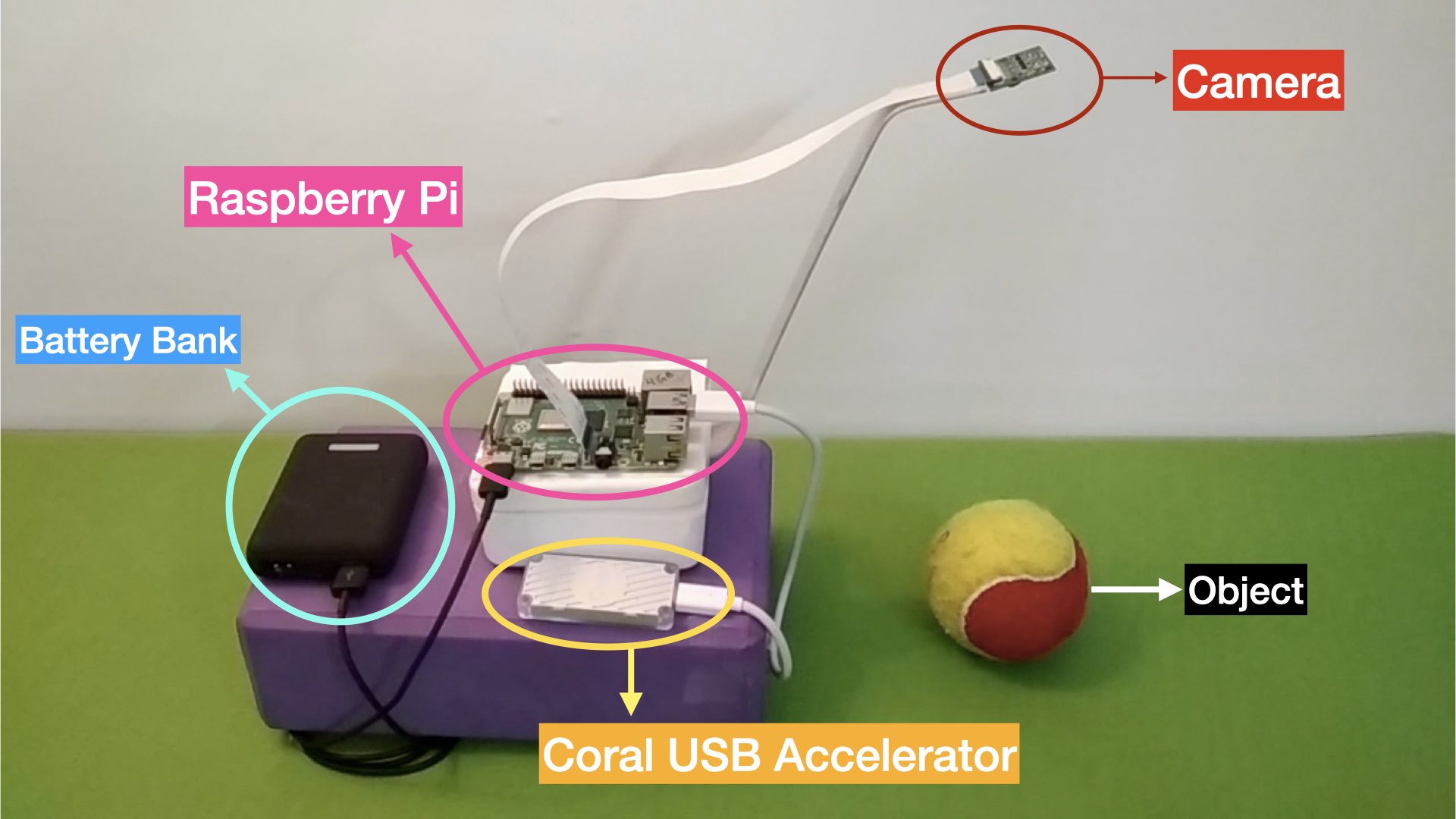

This experiment measures the performance of four Raspberry Pi models (Pi 4B 4GB, Pi 4B 8GB, Pi 3B, Pi 3A+), with and without the Coral USB Accelerator. The same set of Python scripts (the test code) performs image classification with a machine learning model (MobileNet V1) on every board. This is achieved by moving the same micro SD card from one board to the next. The setup is shown in the accompanying picture.

Before plugging the Coral USB Accelerator into the Raspberry Pi, we need to install certain dependencies. These are laid out clearly on the official Coral website: https://coral.ai/docs/accelerator/get-started.

Just follow the instructions on that page and you will be ready to use the Coral hardware with the Raspberry Pi.
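Once the installation is done, a quick sanity check can confirm that the Python side is in place. The sketch below only checks that the `tflite_runtime` package is importable. The helper name `tflite_runtime_installed` is made up for illustration, and the check does not verify that the Edge TPU runtime library or the USB device itself is present.

```python
import importlib.util


def tflite_runtime_installed() -> bool:
    """Return True if Python can locate the tflite_runtime package."""
    return importlib.util.find_spec("tflite_runtime") is not None


if __name__ == "__main__":
    if tflite_runtime_installed():
        print("tflite_runtime found")
    else:
        print("tflite_runtime missing: re-check the steps on the Coral get-started page")
```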

Testing Coral USB Accelerator

The test scripts used in this experiment can be downloaded from this GitHub link.

There are two Python scripts, two model files and a label file in the folder. The two Python scripts are:-

=> classify.py : It works with model file 'mobilenet_v1_1.0_224_quant.tflite' and does not make use of the Coral USB accelerator.

=> classify_coral.py: It works with model file 'mobilenet_v1_1.0_224_quant_edgetpu.tflite' and makes use of the Coral USB accelerator. This file is identical to 'classify.py' except for the minor modifications needed to make it work with the Coral USB accelerator. The modifications are as follows:

1. Import load_delegate from tflite_runtime.interpreter

2. Change the model file path so that it points to the 'edgetpu' model file

3. Create the interpreter with the 'load_delegate' function.

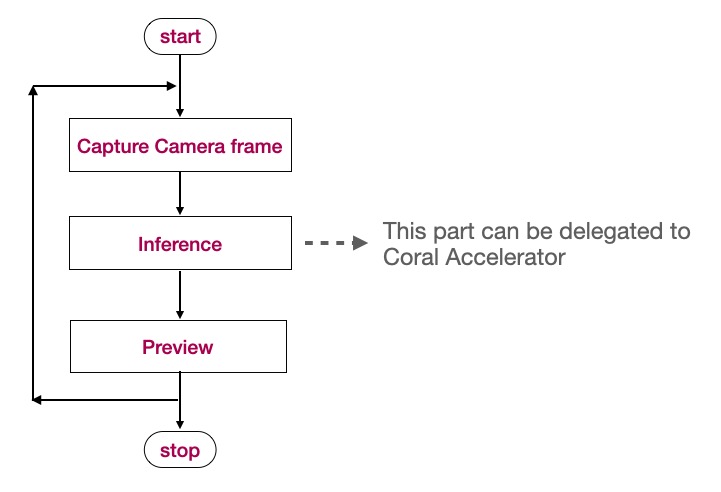

The details are covered in the Code Walkthrough section. The basic tasks performed by both scripts are shown below.

'Camera capture' and 'Preview' involve getting a frame from the camera and displaying it in an output window with suitable annotations. There are multiple ways to do these tasks efficiently and minimise the processing time. One such way is to handle the camera-related work through OpenCV.

'Inference' involves obtaining predictions from the model file for the input image. The time taken in this step depends on the model file being used, and may vary from model to model with factors such as model size and the number of classes. Without external hardware acceleration, this task is performed by the CPU and consumes precious processor resources. To build applications that use a machine learning model in a real-time use case, inference time must be as low as possible in order to maximise FPS.
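To see how the stage times relate to FPS: the loop handles one frame per pass, so the frame rate is the reciprocal of the summed stage times. The sketch below works this through. The function name and all the numbers are illustrative placeholders, not measurements from this experiment.

```python
def frames_per_second(capture_s: float, inference_s: float, preview_s: float) -> float:
    """Frame rate of a loop that runs the three stages back to back."""
    total = capture_s + inference_s + preview_s
    if total <= 0:
        raise ValueError("stage times must add up to a positive duration")
    return 1.0 / total


# Hypothetical stage times in seconds (illustrative only).
slow = frames_per_second(capture_s=0.05, inference_s=0.15, preview_s=0.05)
fast = frames_per_second(capture_s=0.05, inference_s=0.015, preview_s=0.05)
print(f"slow inference: {slow:.1f} FPS, fast inference: {fast:.1f} FPS")
```

With these placeholder values, a tenfold cut in inference time roughly doubles the frame rate, because capture and preview still take their share of every pass.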

The Python script 'classify_coral.py' delegates the inferencing part to the Coral USB Accelerator and brings down the processing time drastically. The observations of time taken by the various models of Raspberry Pi are brought out in the next section.

The Result Summary

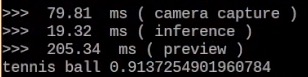

The results obtained from running the test scripts are summarised here. While the scripts run, the time taken by the three tasks (camera capture, inference, preview) varies with every frame. A snapshot of a typical case is shown in the results.
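Instead of picking a representative frame by eye, the per-stage times could also be averaged over many frames. The sketch below shows one way to do that, assuming the timings are collected as (stage, seconds) pairs. This is not how the figures in this post were produced, and the sample values are invented.

```python
from collections import defaultdict
from statistics import mean


def average_stage_times(samples):
    """Average the recorded durations for each stage.

    samples: iterable of (stage_name, seconds) pairs, one per timed stage per frame.
    Returns a dict mapping stage name to the mean duration in seconds.
    """
    by_stage = defaultdict(list)
    for stage, seconds in samples:
        by_stage[stage].append(seconds)
    return {stage: mean(values) for stage, values in by_stage.items()}


# Hypothetical timings for two frames (illustrative only).
samples = [
    ("camera capture", 0.040), ("inference", 0.100), ("preview", 0.060),
    ("camera capture", 0.060), ("inference", 0.120), ("preview", 0.040),
]
averages = average_stage_times(samples)
for stage, seconds in averages.items():
    print(f"{stage}: {seconds * 1000:.0f} ms")
```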

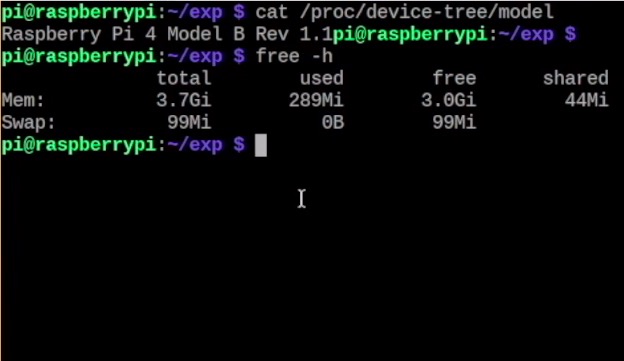

Raspberry Pi 4B (4GB)

CPU: 64-bit quad-core @ 1.5 GHz, RAM: 4 GB

Commands verifying Raspberry Pi version

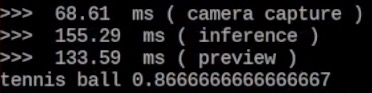

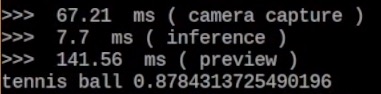
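The board can also be identified from within Python. The sketch below assumes that the operating system exposes the model string at `/proc/device-tree/model`, as Raspberry Pi OS does. The helper name `read_pi_model` is hypothetical, and this is not necessarily the command used for the screenshots.

```python
from pathlib import Path
from typing import Optional


def read_pi_model(path: str = "/proc/device-tree/model") -> Optional[str]:
    """Return the board model string, or None if the file is not available."""
    try:
        raw = Path(path).read_bytes()
    except OSError:
        return None
    # The device-tree string is NUL-terminated, so strip the trailing byte.
    return raw.rstrip(b"\x00").decode("utf-8", errors="replace").strip()


if __name__ == "__main__":
    print(read_pi_model() or "model file not found (not a Raspberry Pi?)")
```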

Without Coral Accelerator (results of running 'classify.py')

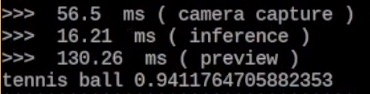

With Coral Accelerator (results of running 'classify_coral.py')

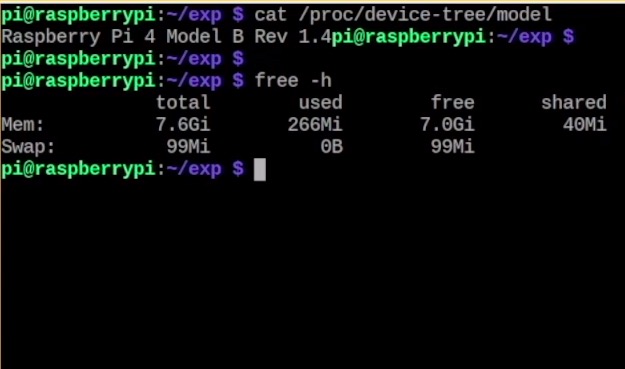

Raspberry Pi 4B (8GB)

CPU: 64-bit quad-core @ 1.5 GHz, RAM: 8 GB

Commands verifying Raspberry Pi version

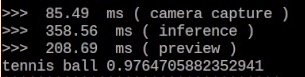

Without Coral Accelerator (results of running 'classify.py')

With Coral Accelerator (results of running 'classify_coral.py')

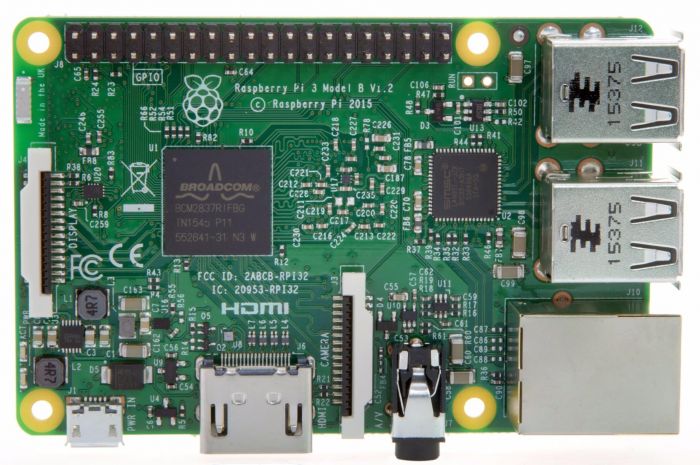

Raspberry Pi 3B

CPU: 64-bit quad-core @ 1.2 GHz, RAM: 1 GB

Commands verifying Raspberry Pi version

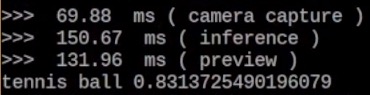

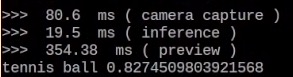

Without Coral Accelerator (results of running 'classify.py')

With Coral Accelerator (results of running 'classify_coral.py')

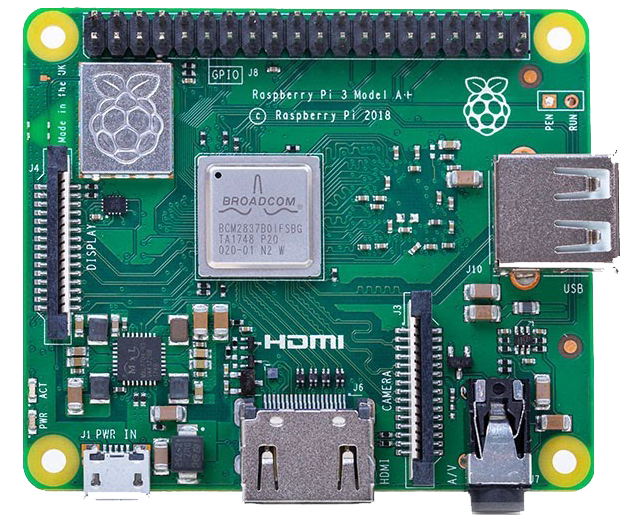

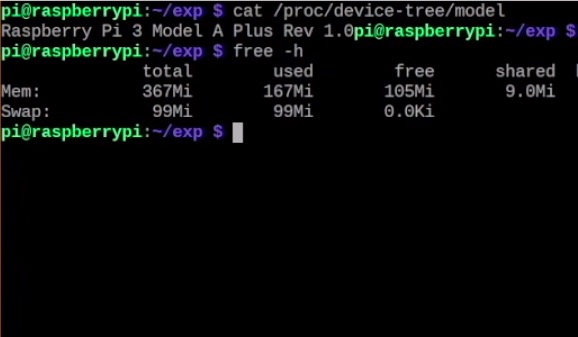

Raspberry Pi 3A+

CPU: 64-bit quad-core @ 1.4 GHz, RAM: 512 MB

Commands verifying Raspberry Pi version

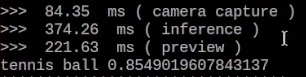

Without Coral Accelerator (results of running 'classify.py')

With Coral Accelerator (results of running 'classify_coral.py')

In all the above cases, we can see a drastic reduction in inference time once the Coral hardware is used. However, 'camera capture' and 'preview' still take the same amount of time, because only the inference step runs on the Coral hardware.
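Because capture and preview are untouched, the speed-up of the whole loop is smaller than the speed-up of inference alone. The sketch below makes the arithmetic explicit. The function name and the stage times are hypothetical and are not the values measured above.

```python
def overall_speedup(capture_s: float, inference_cpu_s: float,
                    inference_tpu_s: float, preview_s: float) -> float:
    """Ratio of per-frame loop time without and with the accelerator."""
    fixed = capture_s + preview_s  # these stages are not accelerated
    return (fixed + inference_cpu_s) / (fixed + inference_tpu_s)


# Hypothetical stage times in seconds: inference alone gets 10x faster.
ratio = overall_speedup(capture_s=0.05, inference_cpu_s=0.20,
                        inference_tpu_s=0.02, preview_s=0.05)
print(f"whole loop is {ratio:.1f}x faster")
```

In this made-up case, a tenfold faster inference step makes the whole loop only 2.5 times faster, which is why optimising capture and preview still matters.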

An overview of the results is provided by this graph.

Code Walkthrough

Since the two test scripts are nearly identical, I will cover only 'classify_coral.py'. Notice the modifications that you need to make in any script to make it compatible with the Coral hardware. The modifications are highlighted with a green background.

In the import section, load_delegate needs to be imported for Coral Hardware

from tflite_runtime.interpreter import load_delegate

Before we enter the endless loop that performs the three tasks shown in the flowchart above, we need to initialise the interpreter and load the model into it. We start by selecting the model file. Here we need to specify the model file that is compiled for the Edge TPU. The Coral hardware won't work with a model file that is not compiled for the Edge TPU.

#this path needs to be changed to 'mobilenet_v1_1.0_224_quant.tflite' if the coral hardware is not used

model_path = "mobilenet_v1_1.0_224_quant_edgetpu.tflite"

#label file is common to both the models

label_path = "labels_mobilenet_quant_v1_224.txt"

Now we use this model file to instantiate the interpreter. Here the 'experimental_delegates' parameter indicates that we want to delegate the inferencing part to Coral hardware.

interpreter = Interpreter(model_path=model_path, experimental_delegates=[load_delegate('libedgetpu.so.1.0')])

# if not using Coral hardware, the experimental_delegates parameter is not required

#and the above line of code can be written as 'interpreter = Interpreter(model_path=model_path)' only
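The two variants can be folded into one helper so that a single script covers both cases. This is a minimal sketch, not the code from the downloadable scripts: the function `make_interpreter` and the `use_coral` flag are hypothetical. The per-platform library names follow the Coral examples, so treat them as an assumption and fall back to the name used above ('libedgetpu.so.1.0') if they do not load on your system.

```python
import platform

# Edge TPU runtime library name per operating system (assumed from the Coral examples).
EDGETPU_SHARED_LIB = {
    "Linux": "libedgetpu.so.1",
    "Darwin": "libedgetpu.1.dylib",
    "Windows": "edgetpu.dll",
}


def make_interpreter(model_path: str, use_coral: bool = True):
    """Create a TFLite interpreter, delegating to the Edge TPU when requested."""
    # Imported here so this module still loads on machines without tflite_runtime.
    from tflite_runtime.interpreter import Interpreter, load_delegate

    if not use_coral:
        return Interpreter(model_path=model_path)

    lib_name = EDGETPU_SHARED_LIB.get(platform.system())
    if lib_name is None:
        raise RuntimeError("no known Edge TPU library for " + platform.system())
    return Interpreter(model_path=model_path,
                       experimental_delegates=[load_delegate(lib_name)])
```

A call such as `make_interpreter("mobilenet_v1_1.0_224_quant_edgetpu.tflite")` would then replace the line above, while `use_coral=False` together with the plain model file gives the CPU-only variant.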

All the modifications that are required for using Coral Hardware have been covered so far. Once the interpreter is made, we go on to allocate the tensors and use them for our purpose.

#allocate tensors

interpreter.allocate_tensors()

# Get input and output tensors.

input_details = interpreter.get_input_details()

output_details = interpreter.get_output_details()

#read the label file

with open(label_path, 'r') as f:

    labels = list(map(str.strip, f.readlines()))

#prediction threshold for triggering actions

threshold=0.5

#for every prediction the model will return 1000 probabilities as there are 1000 classes. We need to specify how many results we would want to keep.

top_k_results = 2
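The `input_details` list obtained above also describes the shape of the input tensor, which tells us what image size the model expects. The sketch below reads it out. The helper name `model_input_size` is hypothetical, and the dictionary is a stand-in for what `interpreter.get_input_details()` returns for a 224x224 RGB model.

```python
def model_input_size(input_details):
    """Return (height, width) from the first input tensor's shape.

    Assumes an image model whose input shape is [batch, height, width, channels].
    """
    _, height, width, _ = input_details[0]["shape"]
    return int(height), int(width)


# Stand-in for interpreter.get_input_details() (illustrative only).
fake_details = [{"index": 0, "shape": [1, 224, 224, 3]}]
height, width = model_input_size(fake_details)
print(f"model expects {width}x{height} images")
```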

Preview window is generated through Matplotlib. This window is updated with the current camera frame.

plt.ion()

plt.tight_layout()

fig = plt.gcf()

fig.canvas.manager.set_window_title('TensorFlow Lite')

fig.suptitle('Image Classification')

ax = plt.gca()

ax.set_axis_off()

tmp = np.zeros([480,640] + [3], np.uint8)

preview = ax.imshow(tmp)

After initialising the camera, the script loops continuously to perform the three tasks.

=> camera capture.

start_t1=time.time()

stream = np.empty((480, 640, 3), dtype=np.uint8)

camera.capture(stream, 'rgb',use_video_port=True) # capturing image directly as numpy array

img = scale_image(stream)

#scale_image() function returns the image after trimming it to the size that is required by the model

time_elapsed(start_t1,"camera capture") # prints the time taken by the processor while executing above lines
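The body of `scale_image()` lives in the downloadable script and is not reproduced here. As a rough idea of what such a helper might do, the sketch below cuts a square window out of the middle of the frame. The name `center_crop`, the default size of 224 and the choice to crop rather than resize are all assumptions.

```python
import numpy as np


def center_crop(frame: np.ndarray, size: int = 224) -> np.ndarray:
    """Cut a size x size window from the middle of an (H, W, 3) frame."""
    height, width = frame.shape[:2]
    if height < size or width < size:
        raise ValueError("frame is smaller than the requested crop")
    top = (height - size) // 2
    left = (width - size) // 2
    return frame[top:top + size, left:left + size]


frame = np.zeros((480, 640, 3), dtype=np.uint8)  # same shape as the camera buffer
img = center_crop(frame)
print(img.shape)
```

Cropping keeps the pixels unchanged but discards the edges of the frame. A resize would keep the full field of view at the cost of extra processing per frame.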

=> Inference

start_t2=time.time()

# make the data compatible with the input tensor. This is done by adding a batch dimension

input_data = np.expand_dims(img, axis=0)

# feed data to input tensor

interpreter.set_tensor(input_details[0]['index'], input_data)

#run the interpreter

interpreter.invoke()

# Obtain results from the interpreter. The result holds one score per label.

predictions = interpreter.get_tensor(output_details[0]['index'])[0]

# Get indices of the top k results

top_k_indices = np.argsort(predictions)[::-1][:top_k_results]

# Getting the result with maximum score

pred_max=predictions[top_k_indices[0]]/255.0

lbl_max=labels[top_k_indices[0]]

#take action based on the maximum prediction value. If the maximum score reaches the threshold, annotate the camera image; otherwise show a placeholder.

if pred_max < threshold:

    camera.annotate_text = "___"

else:

    percent = round(pred_max*100)

    txt = " " + lbl_max + " (" + str(percent) + "%)"

    camera.annotate_text = txt

time_elapsed(start_t2,"inference") #print the time taken in all the above steps
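The top-k selection and the division by 255 are easier to follow on a tiny example. The quantised model returns scores as unsigned 8-bit integers, and the script maps them to the 0 to 1 range by dividing by 255. In the sketch below, the function name, the four-class output and the labels are made up for illustration.

```python
import numpy as np


def top_k_scores(predictions: np.ndarray, labels, k: int = 2):
    """Return the k best (label, score) pairs, with scores scaled to 0..1."""
    order = np.argsort(predictions)[::-1][:k]  # indices, highest score first
    return [(labels[i], float(predictions[i]) / 255.0) for i in order]


# Hypothetical 4-class output; the real model returns 1000 uint8 scores.
predictions = np.array([3, 200, 50, 128], dtype=np.uint8)
labels = ["cat", "dog", "car", "cup"]
results = top_k_scores(predictions, labels)
for label, score in results:
    print(f"{label}: {score:.2f}")
```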

=> Preview

start_t3=time.time()

#update the camera preview window

preview.set_data(stream)

fig.canvas.get_tk_widget().update()

time_elapsed(start_t3,"preview") # print time taken in above steps
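The `time_elapsed()` helper used after each task is also not shown in this walkthrough. The sketch below shows what it could look like, assuming it prints the elapsed time in milliseconds. The body, the output format and the return value are guesses rather than the script's actual code.

```python
import time


def time_elapsed(start_time: float, event: str) -> float:
    """Print and return the milliseconds elapsed since start_time."""
    elapsed_ms = (time.time() - start_time) * 1000.0
    print(f"{event}: {elapsed_ms:.1f} ms")
    return elapsed_ms


start = time.time()
time.sleep(0.01)  # stand-in for real work
elapsed = time_elapsed(start, "demo")
```

`time.time()` is used here to match the calls above. For timing short intervals, `time.perf_counter()` is generally the more precise choice.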

Comments

Thank you for the experiment. There is one more parameter that would have been very interesting to measure: the overall CPU load. Running TensorFlow on the CPU is incredibly expensive (object detection on a single HD camera at 5 FPS uses about 73% CPU on an RPi 4B 4GB). A Coral USB is bound to reduce that dramatically, which should also lower the CPU temperature in the long term. It would be really interesting to see a part 2 where you evaluate that aspect as well. Thank you for the time you dedicated to this. It is really useful.

Hi Sudhanshu,

You can try the various buying options given here: https://coral.ai/products/accelerator/

I got it through someone in the US.