Model Garden - Testing 20+ Machine Learning models on Raspberry Pi

Model Garden is a tool created to test multiple Machine Learning models on Raspberry Pi. The project won the 'TensorFlow Community Spotlight' award for June 2021.

Google provides a variety of pre-trained Machine Learning models in the computer vision category, including the Inception and MobileNet families. Several versions of each have been released in recent years. Inception is a deep Convolutional Neural Network (CNN) used primarily for classifying images. MobileNets are also CNNs, optimised to run efficiently on edge devices such as Coral Dev Boards, mobile phones and Raspberry Pi. They are small models with low latency and high accuracy.

The Google Coral team provides a complete list of its pre-trained models. Some of the computer vision models are bundled together as canned models and can be downloaded as a single package from this link:

https://dl.google.com/coral/canned_models/all_models.tar.gz
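If you prefer to fetch the package by hand instead of through the install script, the download can be sketched in Python roughly as follows. The URL is the one given above. The function names and the `all_models` destination folder are assumptions made for this illustration and are not part of the project.

```python
import tarfile
import urllib.request
from pathlib import Path

# URL taken from the article; everything else here is illustrative.
MODELS_URL = "https://dl.google.com/coral/canned_models/all_models.tar.gz"


def extract_archive(archive_path, dest_dir):
    """Unpack a .tar.gz archive and return the sorted names of the .tflite files in it."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive_path, "r:gz") as archive:
        archive.extractall(dest)
    return sorted(p.name for p in dest.rglob("*.tflite"))


def download_models(url=MODELS_URL, dest_dir="all_models"):
    """Download the canned-models archive to a temporary file and unpack it.

    Needs network access; 'all_models' is an assumed folder name.
    """
    archive_path, _headers = urllib.request.urlretrieve(url)
    return extract_archive(archive_path, dest_dir)
```

Calling `download_models()` on the Raspberry Pi should leave the model and label files in the chosen folder and return the list of model file names.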

The canned models package contains the following model and label files:

| Type | Model file | Label file | Description |
|---|---|---|---|
| Image Classification | inception_v1_224_quant_edgetpu.tflite | imagenet_labels.txt | These models can classify up to 1000 different types of objects, as listed in the label file. This label file is common to all these models. For a given input image, the accuracy and latency are different for each of these models. |
| | inception_v2_224_quant_edgetpu.tflite | | |
| | inception_v3_299_quant_edgetpu.tflite | | |
| | inception_v4_299_quant_edgetpu.tflite | | |
| | mobilenet_v1_1.0_224_quant_edgetpu.tflite | | |
| | mobilenet_v2_1.0_224_quant_edgetpu.tflite | | |
| | mobilenet_v2_1.0_224_inat_bird_quant_edgetpu.tflite | inat_bird_labels.txt | This model can classify 900+ birds |
| | mobilenet_v2_1.0_224_inat_insect_quant_edgetpu.tflite | inat_insect_labels.txt | This model can classify 1000+ insects |
| | mobilenet_v2_1.0_224_inat_plant_quant_edgetpu.tflite | inat_plant_labels.txt | This model can classify 2100+ plants |
| Object Detection | mobilenet_ssd_v1_coco_quant_postprocess_edgetpu.tflite | coco_labels.txt | These models can locate up to 90 different types of objects in a picture frame |
| | mobilenet_ssd_v2_coco_quant_postprocess_edgetpu.tflite | | |
| | mobilenet_ssd_v2_face_quant_postprocess_edgetpu.tflite | coco_labels.txt | This model can locate human faces in a picture frame |

Each of the above models is present in the canned models package in two versions: one that runs on the CPU and one compiled to run on the Edge TPU (Coral USB Accelerator), identified by the "_edgetpu" suffix in its file name.
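The relation between the two versions of a model can be sketched as a pair of small helpers. This assumes that the CPU version carries the same file name without the `_edgetpu` suffix, which is how the Coral package names its files as far as I know. The helper names are hypothetical and do not come from the project's script.

```python
import os

# Assumed naming convention: <name>.tflite (CPU) and <name>_edgetpu.tflite (Edge TPU).
EDGETPU_SUFFIX = "_edgetpu"


def cpu_variant(model_file):
    """Return the CPU file name for a model, dropping the Edge TPU suffix if present."""
    stem, ext = os.path.splitext(model_file)
    if stem.endswith(EDGETPU_SUFFIX):
        stem = stem[: -len(EDGETPU_SUFFIX)]
    return stem + ext


def edgetpu_variant(model_file):
    """Return the Edge TPU file name for a model, adding the suffix if it is missing."""
    stem, ext = os.path.splitext(cpu_variant(model_file))
    return stem + EDGETPU_SUFFIX + ext
```

With helpers like these, a script only needs to remember one name per model and can derive the other when the accelerator is plugged in or removed.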

You can run these models on Raspberry Pi using the example scripts provided by the Coral team. However, before running the examples, you need to install the TensorFlow Lite interpreter and the Coral Accelerator libraries on your Raspberry Pi.
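Once both libraries are installed, loading a model for the CPU or for the Coral Accelerator looks roughly like the sketch below. It uses the `tflite_runtime` package and the Edge TPU delegate library names listed in the Coral documentation. The function names are my own for this illustration, and this is not necessarily how model_garden.py does it.

```python
import platform

# Shared-library names of the Edge TPU runtime per operating system.
EDGETPU_LIBS = {
    "Linux": "libedgetpu.so.1",       # Raspberry Pi OS
    "Darwin": "libedgetpu.1.dylib",   # macOS
    "Windows": "edgetpu.dll",
}


def edgetpu_library(system=None):
    """Return the Edge TPU runtime library name for the given (or current) OS."""
    return EDGETPU_LIBS[system or platform.system()]


def make_interpreter(model_path, use_edgetpu=False):
    """Create a TensorFlow Lite interpreter, optionally backed by the Coral Accelerator."""
    # Imported here so that the helper above can be used without tflite_runtime installed.
    from tflite_runtime.interpreter import Interpreter, load_delegate

    delegates = [load_delegate(edgetpu_library())] if use_edgetpu else None
    interpreter = Interpreter(model_path=model_path, experimental_delegates=delegates)
    interpreter.allocate_tensors()
    return interpreter
```

A model compiled for the Edge TPU has to be loaded with `use_edgetpu=True`, while the CPU version of the same model is loaded without the delegate.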

While working with the various examples, I found myself juggling different scripts whenever I tried to switch between classification and detection models, because they use different processing logic. I wanted to switch between them at run time using a single script. So it occurred to me that it would be nice if I could combine the following capabilities into a single master script:

- Flexibility to choose between input devices such as a Picamera or a USB camera.

- Switch between 24 different models at run time without stopping or restarting the script.

- Depending on the model loaded on the CPU or Edge TPU, dynamically process the input image through classification or detection logic.

- Attach or detach the Coral Accelerator at run time.

- Overlay the inference output on the image and stream it over the LAN so that it can be viewed in a browser.
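The third capability, choosing the processing logic from the loaded model, can be sketched as a simple dispatch table. This is a minimal illustration and not the actual code of the script. It assumes that detection models can be recognised by `_ssd_` in their file names, as in the table above, and all function names and result formats are hypothetical.

```python
def model_kind(model_file):
    """Guess the task from the file name: SSD models detect, all others classify."""
    return "detection" if "_ssd_" in model_file else "classification"


def summarize_classification(result):
    """result: a (label, score) pair for the top class."""
    label, score = result
    return [f"{label}: {score:.2f}"]


def summarize_detection(result):
    """result: a list of (label, score, box) tuples, one per detected object."""
    return [f"{label}: {score:.2f} at {box}" for label, score, box in result]


# One handler per task; switching models only changes which handler is looked up.
HANDLERS = {
    "classification": summarize_classification,
    "detection": summarize_detection,
}


def overlay_lines(model_file, result):
    """Return the text lines to draw on the frame for the given model's result."""
    return HANDLERS[model_kind(model_file)](result)
```

Because the handler is looked up on every frame, loading a different model at run time changes the overlay without restarting anything.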

The project folder contains a Python script, “model_garden.py”, which does all the tasks mentioned above. The folder also contains the code for a simple web interface that displays the streaming output of the Python script, along with buttons that send commands to the script to load a different model at run time.

This tool is useful for quick demonstrations of all the canned models and can easily be extended to accommodate more models.

Configure Your Raspberry Pi to Run This Project

To make things simpler for beginners, I created a bash script that fully configures a Raspberry Pi to run this project. Download this bash script (right click and save) on your Raspberry Pi and run it using the command "sudo sh install.sh". The script downloads all the packages, libraries, models and source code required to run this tool on your device.

The bash script performs the following actions on your Raspberry Pi automatically:

- Update & upgrade Raspberry Pi OS

- Install Apache Webserver and PHP

- Install TensorFlow Lite and the Google Coral USB Accelerator libraries

- Install OpenCV

- Download the pre-trained models from the Google Coral repository

- Download the model_garden source code

- Move the models and code to the desired location on your Raspberry Pi and set permissions

Testing the Machine Learning Models on Raspberry Pi

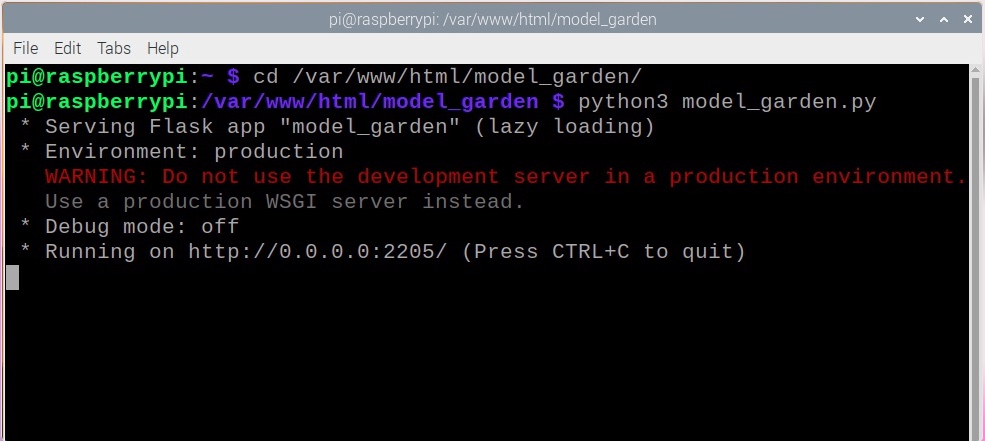

Once the bash script has configured your Raspberry Pi successfully, you can find the project folder at “/var/www/html/model_garden”. Open a terminal, go to the project folder and run the Python script using the command “python3 model_garden.py”, as shown in the picture below.

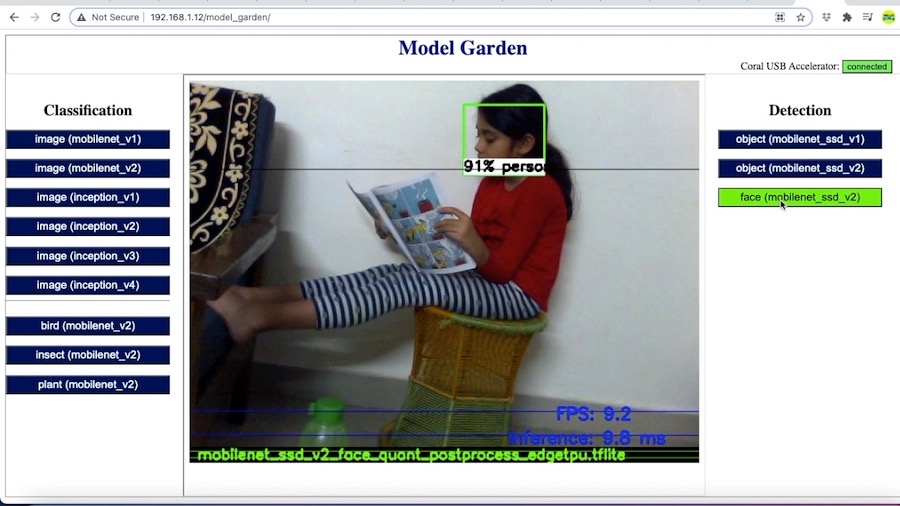

Once you see the above message in the terminal, open a browser on a laptop connected to the same network and enter the URL of the project folder. You will notice that the script has started running and that its output is displayed in the terminal as well as in the web GUI.

By pressing the buttons in the web GUI, you can switch between the models at run time. Pressing the USB Coral Accelerator button (top right corner of the GUI) loads the Edge TPU version of the selected model on the Coral Accelerator. This way you can quickly run all 24 Machine Learning models on Raspberry Pi and compare their performance. Observe how the overlays on the output image change when you switch between an image classification model and an object detection model.

Using a face detection model, we can locate a human face in a picture, as shown below.

The canned models also include a few domain-specific models, covering insects, birds and plants. These models can classify about 1000 insects, 900 birds and 2100 types of plants respectively. Their label files list the scientific names of these subjects. To test these models, I downloaded three random images from the internet, one from each category (as shown below).

I had already checked the scientific names of these subjects while downloading their images, so during the test I was hoping the models would show me these names. As expected, the respective models identified all three images correctly, which was thrilling to see. The picture below shows the actual outputs of the respective models. The prediction with the maximum confidence score is shown in red text on top of the image.
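Picking the prediction with the maximum confidence score and looking up its name in the label file can be sketched as below. This assumes label files with either "id name" rows or one plain name per line, and quantized models whose scores are integers from 0 to 255. Both are assumptions about the canned models, and the function names are hypothetical.

```python
def parse_labels(lines):
    """Map class ids to names; accepts 'id name' rows or one plain name per line."""
    labels = {}
    for row, line in enumerate(lines):
        parts = line.strip().split(maxsplit=1)
        if len(parts) == 2 and parts[0].isdigit():
            labels[int(parts[0])] = parts[1]
        elif parts:
            # No leading id: fall back to the row number as the class id.
            labels[row] = line.strip()
    return labels


def top_prediction(scores, labels, scale=255.0):
    """Return (name, confidence) for the highest score.

    'scale' converts a quantized score to the 0..1 range (255 is an assumption
    that holds for uint8 outputs).
    """
    best = max(range(len(scores)), key=lambda i: scores[i])
    return labels.get(best, f"class {best}"), scores[best] / scale
```

For example, `parse_labels(open("inat_bird_labels.txt"))` would build the lookup table for the bird model, and `top_prediction` would then return the scientific name that gets drawn on the image.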

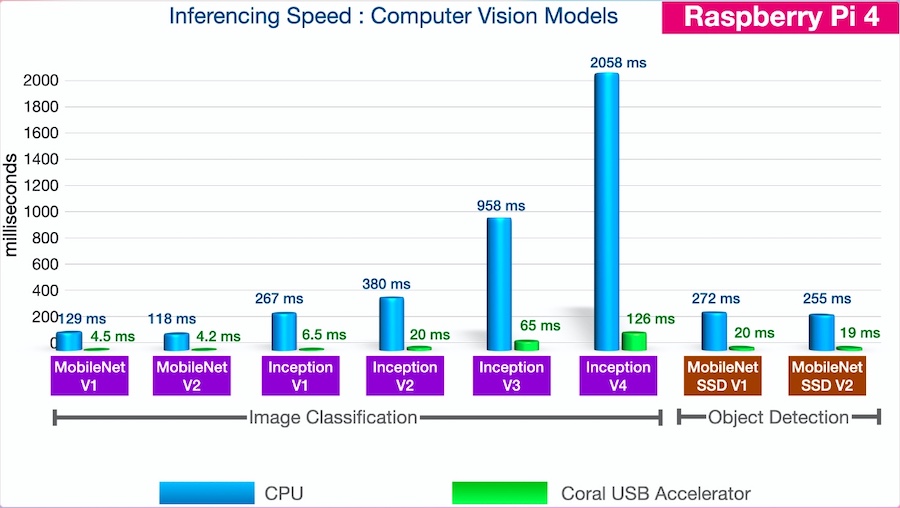

Raspberry Pi 4 Performance Graph

The results obtained from running the Machine Learning models on Raspberry Pi 4 are as follows:

From the graph we can infer the following:

- MobileNets run inference quite fast even on the CPU, without the Coral USB Accelerator.

- Inference time increases as we go from MobileNet V2 to Inception V4. For the same input image, Inception V4 gave the highest confidence. However, it runs 20 times slower than MobileNet V2.

- Running Inception V4 on the Coral USB Accelerator speeds it up, but even then it is only about as fast as MobileNet V1 running without any acceleration.

- MobileNet V1 and V2 run fastest on the Coral Accelerator, with inference times under 5 ms.

- The object detection model MobileNet SSD V2 runs marginally faster than MobileNet SSD V1.
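If you want to reproduce such measurements yourself, inference time can be estimated by timing repeated calls, as in this minimal sketch. The helper names, the number of runs and the warm-up count are arbitrary choices for illustration. This is not the measurement code behind the graphs.

```python
import statistics
import time


def latency_ms(run_inference, runs=20, warmup=2):
    """Return the mean time of one call to run_inference, in milliseconds."""
    # Warm-up calls are not timed; the first inference is often slower than the rest.
    for _ in range(warmup):
        run_inference()
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        run_inference()
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.mean(samples)


def slowdown(slow_ms, fast_ms):
    """How many times slower the first model is than the second."""
    return slow_ms / fast_ms
```

Here `run_inference` would be any function that feeds one frame through the loaded model, for example a small wrapper around the interpreter's `invoke()` call.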

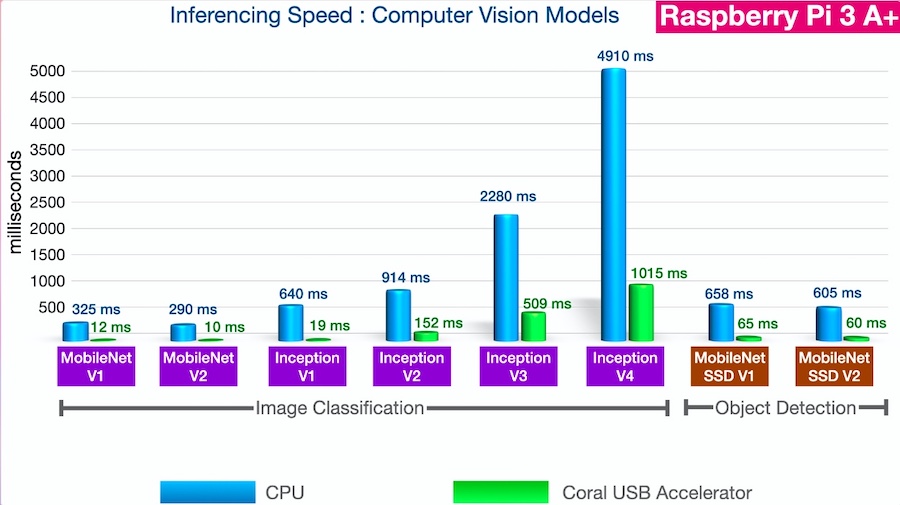

Raspberry Pi 3A+ Performance Graph

The results obtained for these Machine Learning models on Raspberry Pi 3A+ are as follows:

You can observe the same pattern with Raspberry Pi 3A+. However, the inference time in all the categories is much higher, because it has less computational power and less RAM than Raspberry Pi 4.

You can try this project on your Raspberry Pi and let me know about your experience in the comments below.
