AI Robot - Human Following Robot using TensorFlow Lite on Raspberry Pi

This article describes how to build a human following robot using a Raspberry Pi. You need to install the TensorFlow Lite runtime on the Raspberry Pi to run the code provided with this article.


The hardware and software components used in the project are as follows:

| Component | Description |
| --- | --- |
| Raspberry Pi 3A+ | The main computer of the robot |
| Coral USB Accelerator | Hardware to speed up the inferencing process |
| Pi Camera | Raspberry Pi 5 MP camera board |
| MobileNet SSD v2 (COCO) | Machine learning model which can detect the location of 90 types of objects in a picture |
| TensorFlow Lite interpreter | Python APIs that enable on-device machine learning inference |

The hardware connections and circuit diagram of this human following robot are covered in the article that describes how the robot was built.

Human Following Robot: An Overview

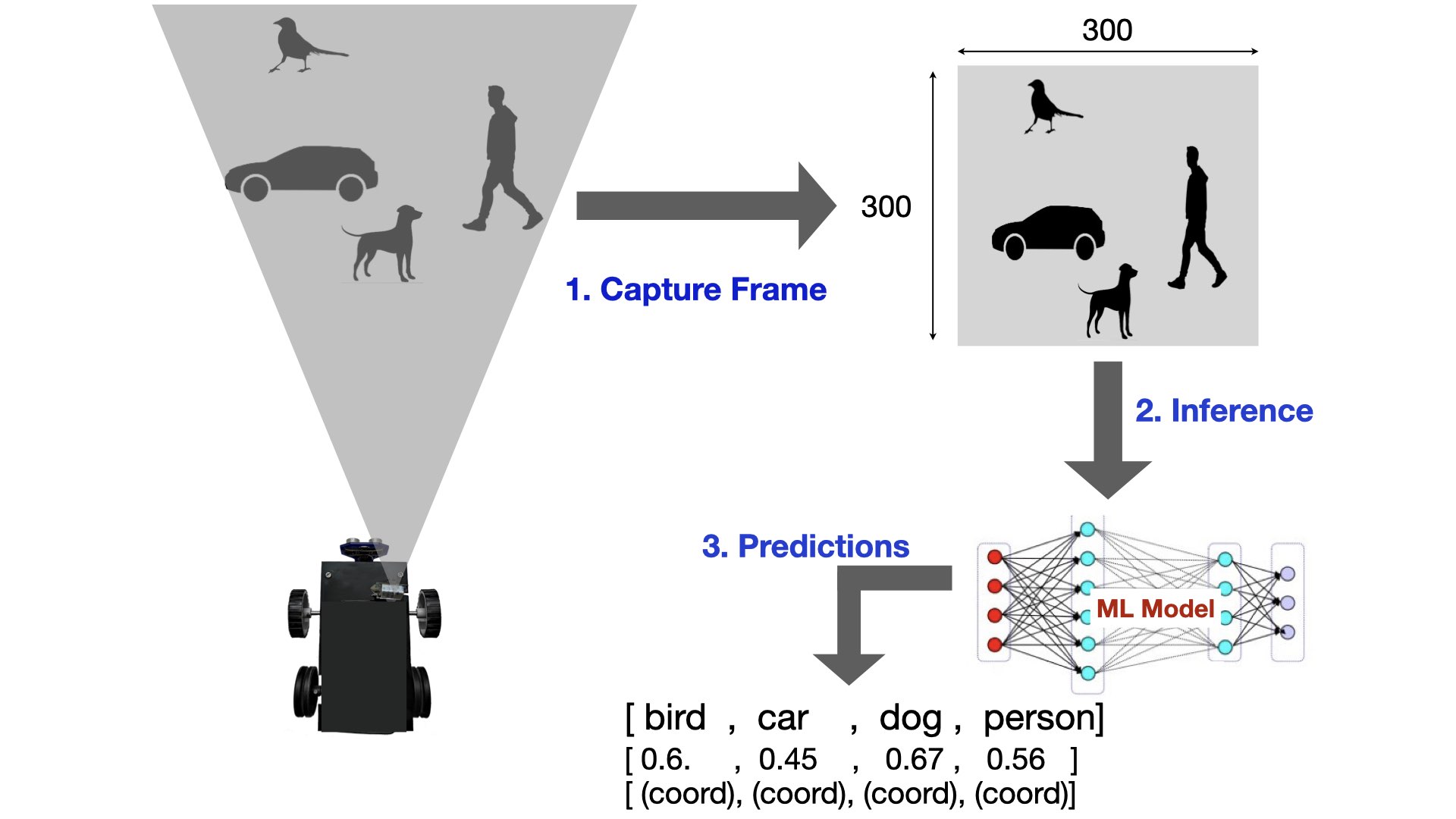

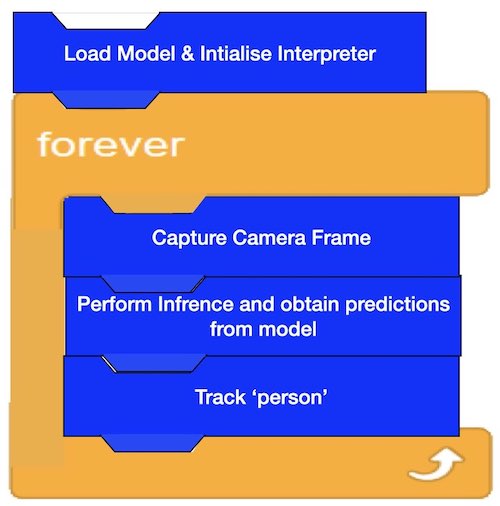

The robot uses its camera to capture an image and feeds it to the object detection machine learning model. The model returns a list of detected objects. This list is traversed to see if a 'person' is present. If so, the tracking code kicks in. An overview of the process is shown in the picture below.

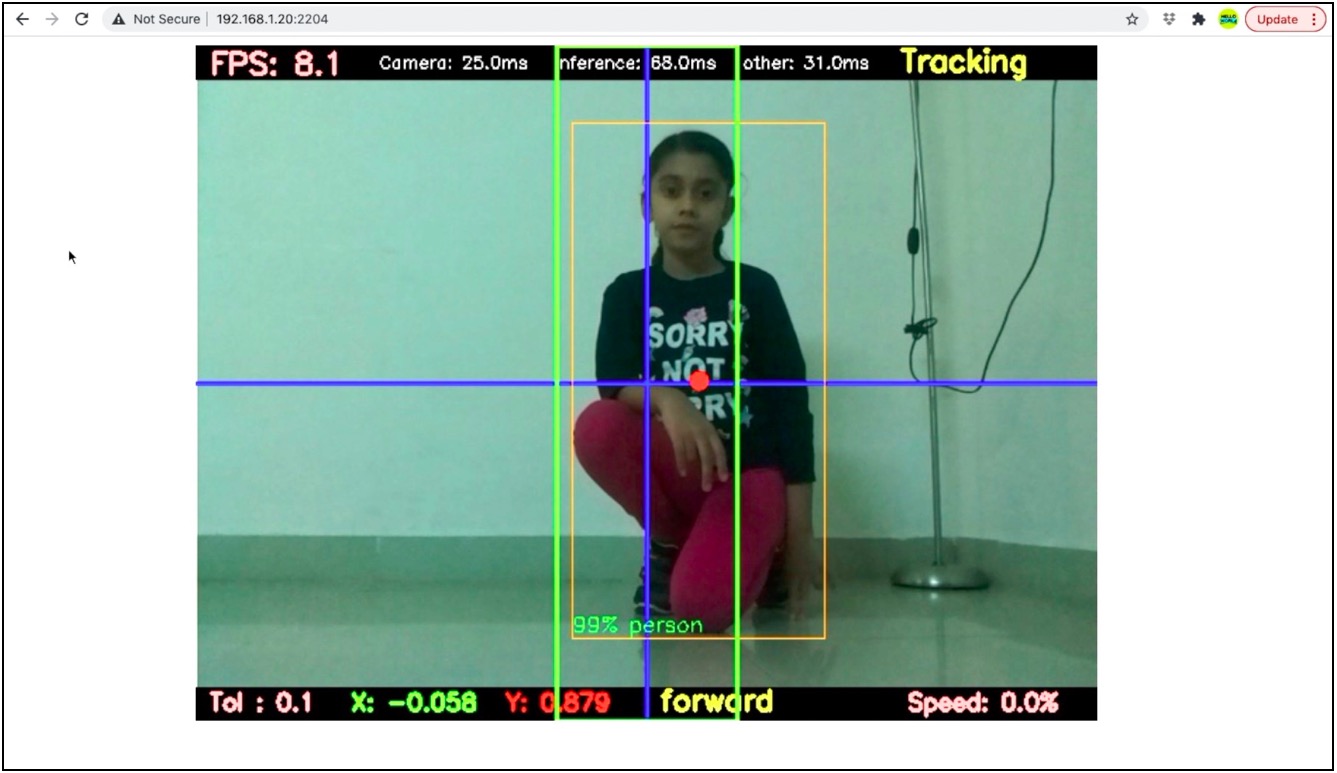
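The flow described above can be sketched as a small loop. This is only an illustration and not the project code: `run_loop`, `capture_frame`, `detect_objects` and `track` are hypothetical names, and the detector is assumed to return plain label names so that the sketch stays self-contained.

```python
def run_loop(capture_frame, detect_objects, track, target="person", max_frames=None):
    """Capture -> detect -> track loop using injected (hypothetical) callables."""
    frames = 0
    while max_frames is None or frames < max_frames:
        frame = capture_frame()
        if frame is None:          # camera returned nothing: leave the loop
            break
        detections = detect_objects(frame)   # assumed: list of label names
        if target in detections:             # tracking only runs for the target
            track(frame)
        frames += 1
    return frames


# Tiny demonstration with stand-in functions instead of a camera and a model.
fake_frames = iter(["frame-1", "frame-2", None])
tracked = []
processed = run_loop(
    capture_frame=lambda: next(fake_frames),
    detect_objects=lambda f: ["person"] if f == "frame-1" else ["chair"],
    track=tracked.append,
)
print(processed, tracked)
```

In the real robot, the three callables correspond to reading the camera, invoking the interpreter, and the tracking function discussed later in this article.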

To get the most out of this article, keep referring to the project code as you read through. In the code folder, the files 'human_follower.py' and 'human_follower2.py' serve the same purpose, i.e. tracking a human, and each can be executed independently. The file 'human_follower.py' has extra lines of code for the Flask implementation and for generating overlays on the camera frame. The Flask code in this file streams the camera view, along with custom overlays created through OpenCV, over the LAN. The streaming view can be monitored via a browser as shown in the picture below.

The file 'human_follower2.py' is a stripped-down version that does not implement Flask. It is the bare minimum implementation of a human following robot using an object detection machine learning model. The rest of this article refers to 'human_follower2.py'.

How it works

The code of Human Following Robot begins with initialising certain parameters. These are explained in the comments below.

    # OpenCV object to access the camera
    cap = cv2.VideoCapture(0)

    # threshold of prediction probability (or score); the model will consider
    # an object detected if its score is above this value
    threshold=0.2

    # keep the top 5 objects which cross the above threshold
    top_k=5

    # this is set to 1 if Coral hardware is used for running the model file
    edgetpu=1

    # path where the model file is located
    model_dir = '/home/pi/Documents/all_models'

    # model file
    model_edgetpu = 'mobilenet_ssd_v2_coco_quant_postprocess_edgetpu.tflite'

    # label file corresponding to the model file
    lbl = 'coco_labels.txt'

    # distance from the center of the frame on both sides; the object being
    # tracked must be brought inside this zone while tracking
    tolerance=0.1

    # deviation of the center of the object from the center of the frame
    x_deviation=0

    # Y coordinate of the bottom edge of the bounding box
    y_max=0

    # name of the object which is required to be tracked by the code
    object_to_track='person'

Inside the main function, the code begins by setting up the interpreter:

interpreter, labels = cm.load_model(model_dir,model_edgetpu,lbl,edgetpu)

The function 'load_model()' is defined in the 'common.py' file. This function initialises the TensorFlow Lite interpreter based on the model and label files passed as parameters. The variable 'edgetpu' is set to '1', which tells the function that a Coral USB Accelerator is present. After initialising the interpreter, the code continuously loops to perform the three tasks as shown in the picture below.
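The body of 'load_model()' is not reproduced in this article. As a rough idea of what such a helper may look like, here is a minimal sketch built on the `tflite_runtime` package. The names `load_labels` and `load_model_sketch` are hypothetical. The label file is assumed to hold one "id name" pair per line, and `libedgetpu.so.1` is the Edge TPU delegate library name on Linux.

```python
import os
import re


def load_labels(path):
    """Parse a label file into {class_id: name}.

    Assumption: each line is "<id> <name>"; a line without an id uses its
    line index as the id.
    """
    labels = {}
    with open(path, "r", encoding="utf-8") as f:
        for index, line in enumerate(f):
            line = line.strip()
            if not line:
                continue
            match = re.match(r"^(\d+)\s+(.+)$", line)
            if match:
                labels[int(match.group(1))] = match.group(2).strip()
            else:
                labels[index] = line
    return labels


def load_model_sketch(model_dir, model_file, label_file, use_edgetpu):
    """Hypothetical stand-in for load_model(): returns (interpreter, labels)."""
    # Imported here so the label helper works even without the runtime installed.
    from tflite_runtime.interpreter import Interpreter, load_delegate

    model_path = os.path.join(model_dir, model_file)
    if use_edgetpu:
        # Route inference through the Coral USB Accelerator.
        interpreter = Interpreter(
            model_path=model_path,
            experimental_delegates=[load_delegate("libedgetpu.so.1")],
        )
    else:
        interpreter = Interpreter(model_path=model_path)
    interpreter.allocate_tensors()
    return interpreter, load_labels(os.path.join(model_dir, label_file))
```

The actual function in 'common.py' may differ in its details; refer to the project code for the real implementation.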

The overall FPS depends on the time taken by the three steps inside the loop. The breakdown of the time taken by these steps is as follows:

| Step | Time taken |
| --- | --- |
| Capture camera frame | 20 ms approx |
| Perform inference and obtain prediction | 60 ms approx |
| Track 'person' | 20 ms approx |
| Total time | 100 ms |
| FPS | 1/(0.1 s) = 10 |

The Coral USB Accelerator speeds up the second step considerably. Without acceleration, this step could take up to 600-900 ms, bringing the FPS down drastically. These values were measured on a Raspberry Pi 3A+, which has a 64-bit quad-core CPU running at 1.4 GHz and 512 MB of RAM. The overall FPS can be improved further by using the newer Raspberry Pi 4.
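The arithmetic behind these figures is easy to reproduce. The snippet below is only a worked example using the approximate timings quoted above; `frames_per_second` is a name chosen for illustration.

```python
def frames_per_second(capture_ms, inference_ms, tracking_ms):
    """FPS is the inverse of the total time spent on one loop iteration."""
    total_ms = capture_ms + inference_ms + tracking_ms
    return 1000.0 / total_ms


# With the Coral USB Accelerator: 20 + 60 + 20 = 100 ms per frame.
print("with accelerator:", frames_per_second(20, 60, 20))

# Without acceleration, inference alone takes roughly 600-900 ms.
print("without, best case:", round(frames_per_second(20, 600, 20), 2))
print("without, worst case:", round(frames_per_second(20, 900, 20), 2))
```

Without the accelerator the loop drops to somewhere between about 1 and 1.6 frames per second, which is too slow to follow a moving person smoothly.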

Let's see what happens in the three steps shown in the picture above.

1. Capture Frame. The code below captures a frame using the Pi Camera, flips it horizontally and vertically, and converts it into the format required by the object detection model. It then feeds the frame to the input tensor of the interpreter.

    ret, frame = cap.read()
    if not ret:
        break

    cv2_im = frame
    cv2_im = cv2.flip(cv2_im, 0)  # flip code 0: vertical flip (around the x-axis)
    cv2_im = cv2.flip(cv2_im, 1)  # flip code 1: horizontal flip (around the y-axis)
    cv2_im_rgb = cv2.cvtColor(cv2_im, cv2.COLOR_BGR2RGB)  # convert OpenCV's BGR order to RGB
    pil_im = Image.fromarray(cv2_im_rgb)  # convert the NumPy array into a PIL image
    cm.set_input(interpreter, pil_im)  # provide the frame as input to the interpreter
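Applying both flips one after the other amounts to rotating the frame by 180 degrees, which is presumably needed because of how the camera is mounted on the robot (an assumption, as the article does not state the reason). The pure-Python sketch below mimics the two flips on a tiny grid of numbers to make this visible. The helper names are illustrative and no OpenCV is involved.

```python
def flip_vertical(rows):
    """Like cv2.flip(img, 0): the top row becomes the bottom row."""
    return rows[::-1]


def flip_horizontal(rows):
    """Like cv2.flip(img, 1): the left column becomes the right column."""
    return [row[::-1] for row in rows]


def rotate_180(rows):
    """Rotation by 180 degrees, written directly for comparison."""
    return [row[::-1] for row in rows[::-1]]


frame = [[1, 2, 3],
         [4, 5, 6]]

both_flips = flip_horizontal(flip_vertical(frame))
print(both_flips)                        # [[6, 5, 4], [3, 2, 1]]
print(both_flips == rotate_180(frame))   # True
```

In OpenCV, the same result can be obtained in a single call with a flip code of -1, which flips around both axes.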

2. Inference. This single line of code runs the model to obtain the list of predictions. This step is called 'inference' and takes up more than half of the processing time. The Coral USB Accelerator is used to bring down this processing time.

interpreter.invoke()

3. Predictions. Once the above line completes execution, the output tensor of the interpreter holds the information with respect to the objects present in the frame. This information is accessed using 'get_output()' function (defined in the file 'common.py') as shown below.

objs = cm.get_output(interpreter, score_threshold=threshold, top_k=top_k)

The variable 'objs' holds the following information for each object:

- Class ID (mapped to the class name through the label file)

- Score (prediction probability)

- Coordinates of the bounding box
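To make this structure concrete, here is a minimal sketch of how such a list could be assembled from raw class IDs, scores and boxes. It is not the code of 'get_output()'. The `Object` and `BBox` tuples and the `select_detections` helper are assumptions for illustration, with field names chosen to match how the tracking code accesses `obj.id` and `obj.bbox`. Boxes are assumed to be normalised to the 0-1 range in (x_min, y_min, x_max, y_max) order.

```python
import collections

# Assumed shapes, mirroring the attributes used later by the tracking code.
Object = collections.namedtuple("Object", ["id", "score", "bbox"])
BBox = collections.namedtuple("BBox", ["xmin", "ymin", "xmax", "ymax"])


def select_detections(class_ids, scores, boxes, score_threshold, top_k):
    """Keep detections at or above the threshold, best scores first, at most top_k."""
    candidates = [
        Object(id=int(c), score=float(s), bbox=BBox(*b))
        for c, s, b in zip(class_ids, scores, boxes)
        if s >= score_threshold
    ]
    candidates.sort(key=lambda o: o.score, reverse=True)
    return candidates[:top_k]


objs = select_detections(
    class_ids=[0, 17, 0],
    scores=[0.9, 0.1, 0.5],
    boxes=[(0.1, 0.2, 0.3, 0.9), (0.5, 0.5, 0.6, 0.6), (0.6, 0.2, 0.9, 0.95)],
    score_threshold=0.2,
    top_k=5,
)
for obj in objs:
    print(obj.id, obj.score, list(obj.bbox))
```

With threshold=0.2, the second detection (score 0.1) is dropped and the remaining two are returned in descending order of score.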

Tracking a Person

The list of objects, along with the labels, is passed to the track_object() function.

track_object(objs,labels) #tracking

The tracking function begins by checking if the list of objects is empty. If it is empty, the function stops the robot, turns off the red light and returns without any further processing.

    def track_object(objs,labels):
        global x_deviation, y_max, tolerance

        if(len(objs)==0):
            print("no objects to track")
            ut.stop()
            ut.red_light("OFF")
            return

If the list of objects is not empty, the code checks each object in turn to see whether its name matches the desired object (i.e. 'person'). If no match is found, a message is printed and the function returns without proceeding further. If there is a match, the code stores the bounding box coordinates of that object and proceeds.

        flag=0
        for obj in objs:
            lbl=labels.get(obj.id, obj.id)
            if (lbl==object_to_track):
                x_min, y_min, x_max, y_max = list(obj.bbox)
                flag=1
                break

        #print(x_min, y_min, x_max, y_max)
        if(flag==0):
            print("selected object no present")
            return
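The search above can also be written as a small function that returns the bounding box of the first match, or None when the target is absent. This is an alternative sketch rather than the project code: `find_target` is a hypothetical name and the `Object` tuple is assumed to carry the same `id` and `bbox` attributes used above.

```python
import collections

# Assumed detection shape: only the attributes used by the tracking code.
Object = collections.namedtuple("Object", ["id", "score", "bbox"])


def find_target(objs, labels, target):
    """Return (x_min, y_min, x_max, y_max) of the first object named `target`."""
    for obj in objs:
        # Fall back to the raw id when the label file has no entry for it.
        name = labels.get(obj.id, obj.id)
        if name == target:
            return tuple(obj.bbox)
    return None


labels = {0: "person", 61: "chair"}
objs = [
    Object(id=61, score=0.8, bbox=(0.0, 0.5, 0.2, 0.9)),
    Object(id=0, score=0.7, bbox=(0.6, 0.2, 0.9, 0.95)),
    Object(id=0, score=0.4, bbox=(0.1, 0.1, 0.3, 0.8)),
]
print(find_target(objs, labels, "person"))   # (0.6, 0.2, 0.9, 0.95)
print(find_target(objs, labels, "dog"))      # None
```

Note that, just like the loop above, this picks the first 'person' in the list. If several people are in view, the others are ignored.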

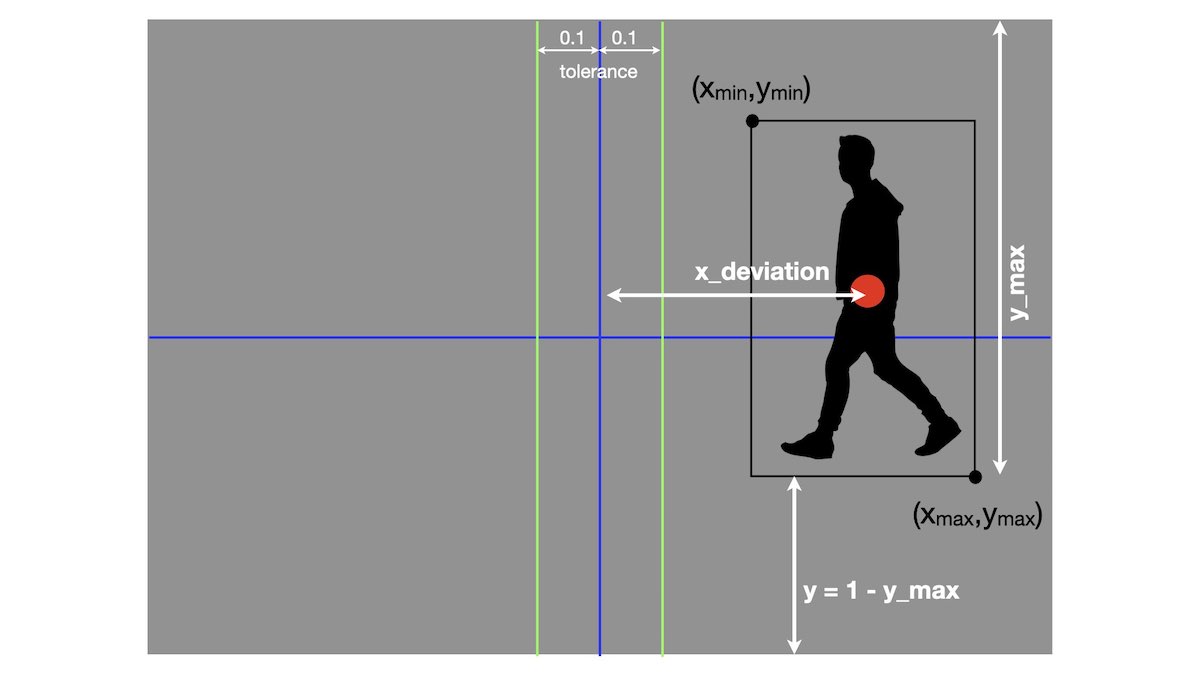

The code below is executed only if the object list is not empty and contains a 'person'. Here, the code calculates the deviation of the person from the center of the frame, as shown in the picture.

        x_diff=x_max-x_min
        y_diff=y_max-y_min

        obj_x_center=x_min+(x_diff/2)
        obj_x_center=round(obj_x_center,3)

        obj_y_center=y_min+(y_diff/2)
        obj_y_center=round(obj_y_center,3)

        x_deviation=round(0.5-obj_x_center,3)
        y_max=round(y_max,3)

        print("{",x_deviation,y_max,"}")
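A quick worked example may help with the sign convention. The sketch below repeats the same arithmetic in a standalone function (`horizontal_deviation` is a name chosen for illustration), assuming the coordinates are normalised so that 0.5 is the horizontal center of the frame.

```python
def horizontal_deviation(x_min, x_max):
    """Deviation of the box center from the frame center (0.5), as computed above."""
    obj_x_center = round(x_min + (x_max - x_min) / 2, 3)
    return round(0.5 - obj_x_center, 3)


# Person in the right half of the frame: center at 0.75, deviation is negative.
print(horizontal_deviation(0.6, 0.9))

# Person in the left half of the frame: center at 0.2, deviation is positive.
print(horizontal_deviation(0.1, 0.3))

# Person centered: deviation is zero.
print(horizontal_deviation(0.4, 0.6))
```

A positive deviation therefore means the person is to the left of the center and a negative one means the person is to the right, which is what the movement code relies on.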

At this stage, we know the exact position of the person inside the frame. Now we can move the robot to bring the person to the center. This is done by calling the 'move_robot()' function inside a thread.

        thread = Thread(target = move_robot)
        thread.start()
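The movement code pauses with time.sleep() while the robot turns, so running it in a thread presumably keeps the frame loop from being held up during that pause. The sketch below shows the pattern with a stand-in for the movement function. `slow_move` and `process_frame` are hypothetical names, and the log list exists only to make the ordering visible.

```python
import threading
import time


def slow_move(log, delay_s):
    """Stand-in for move_robot(): blocks for a while, like a timed turn."""
    time.sleep(delay_s)
    log.append("move finished")


def process_frame(log, delay_s=0.05):
    """Start the movement in a thread and carry on immediately."""
    worker = threading.Thread(target=slow_move, args=(log, delay_s))
    worker.start()
    log.append("frame loop continues")   # runs while the worker is still sleeping
    return worker


log = []
worker = process_frame(log)
worker.join()          # only needed here so the demo prints a complete log
print(log)
```

The entry from the frame loop is recorded first because the worker is still sleeping at that moment.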

Movement of Robot

Forward movement of the robot

Using the information calculated in the track_object() function above, the code here checks if the horizontal deviation of the 'person' from the center of the frame is within the 'tolerance' value. If yes, the code checks if the distance between the bottom of the bounding box and the bottom of the frame is below the set margin (0.1). If it is, the robot has reached the person and there is no need to move. Otherwise, the robot moves forward.

    def move_robot():
        global x_deviation, y_max, tolerance

        y=1-y_max #distance from bottom of the frame

        if(abs(x_deviation)<tolerance):
            if(y<0.1):
                ut.red_light("ON")
                ut.stop()
                print("reached person...........")
            else:
                ut.red_light("OFF")
                ut.forward()
                print("moving robot ...FORWARD....!!!!!!!!!!!!!!")

Sideways movement of the robot (left-right motion)

This part is executed whenever the 'person' moves out of the tolerance zone. The robot turns left or right for a short duration, returned by get_delay(), to bring the person back to the center of the frame.

        else:
            ut.red_light("OFF")

            if(x_deviation>=tolerance):
                delay1=get_delay(x_deviation)
                ut.left()
                time.sleep(delay1)
                ut.stop()
                print("moving robot ...Left....<<<<<<<<<<")

            if(x_deviation<=-1*tolerance):
                delay1=get_delay(x_deviation)
                ut.right()
                time.sleep(delay1)
                ut.stop()
                print("moving robot ...Right....>>>>>>>>")
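The complete decision logic of move_robot() can be condensed into a pure function that is easy to test on a desk without any motors attached. This is a sketch for understanding, not a replacement for the project code. `decide_action` and `stop_margin` are names introduced here, and the turn duration from get_delay() is left out because that function is not shown in this article.

```python
def decide_action(x_deviation, y_max, tolerance=0.1, stop_margin=0.1):
    """Return 'stop', 'forward', 'left' or 'right' using the rules described above."""
    distance_from_bottom = 1 - y_max
    if abs(x_deviation) < tolerance:
        # Person is centered: stop when the box nearly touches the frame bottom.
        if distance_from_bottom < stop_margin:
            return "stop"
        return "forward"
    if x_deviation >= tolerance:
        return "left"     # person is in the left part of the frame
    return "right"        # person is in the right part of the frame


print(decide_action(0.0, 0.95))    # stop
print(decide_action(0.05, 0.5))    # forward
print(decide_action(0.3, 0.5))     # left
print(decide_action(-0.25, 0.95))  # right
```

Notice that the robot only moves forward once the person is inside the tolerance zone. While the person is off-center, it turns first, even if the person is still far away.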

I hope you enjoyed this article. If you have any questions, ask me in the comments below.

Comments

What OS was used for the project.. i used ubuntu 204 and having problems with RPIO and tensorflow lite. Its because of the root access requirement of RPIO but when i run the code it using sudo command tensorflow runtime is not to be found

You need to use Raspberry Pi Legacy OS

Can I use this code to raspberry pi 4?

Yes, you can use it on Pi 4

Thank you for your awesome code.

I love you.

Hi, if many persons are in front of your robot, how can your robot detect and follow the target person ???

Sir, have you tried implementing YOLO algorithm? if yes, then was there any major improvement in FPS without using the Coral accelerator?

Hi Aditya, Sorry I haven't tried YOLO. But i'm sure that there won't be major improvement without Coral Accelerator.

Hello Friend, Would you be able to add the PAN-TILT in the human follow-up? Because with camera movements improves the accuracy of tracking the man. could you add this code?

You need to have a fixed position of camera for this code. Tracking is done by moving the entire robot to get the person in the center of frame. PAN-TILT won't work with this code

its same as described here

https://helloworld.co.in/article/basic-robotics-make-robot-raspberry-pi-web-controls

Im also working on this project (college project) help me...PLZZ..i need ur guidence